What inspired you to create goindex.me?

One day, after logging into one of my old Gmail accounts and clicking on some old bookmarks, I stumbled upon a graph from Google Search Console. It was the performance graph of a website I had created in 2021.

What stood out from that graph was that my website had not been indexed by Google, even after three years. I realized that if a website is not indexed by the largest search engine on the internet, it effectively does not exist, despite all the effort put into creating it.

This experience made me feel the need to take action using my development skills. I began thinking about how to address this issue, which led to the creation of Go Index Me.

How did you develop Go Index Me and shift from manual to automated methods?

The process of addressing the indexing issue started with manually clicking the “Request Indexing” button in Google Search Console. That quickly turned out to be a poor solution as Google limits the number of indexing requests sent from the web interface to 10 requests per day. I then explored the Google Indexing API as a potential solution.

Version 0.1 of the tool was developed using PHP to automate URL indexing requests to Google, marking a significant step forward in efficiently resolving the indexing problem.

Can you describe the planning process for Go Index Me’s architecture?

The initial focus was narrow: my own web pages had critical indexing issues, and I wanted a fast way to keep content from being deindexed.

After discovering the Google Indexing API, I built a tiny tool to send URL indexing requests automatically.

The proof of concept stage did not include any database structures or other deeper CI/CD integrations. I just wanted to discover all edge cases and API restrictions to see how far I could take this thing.

After a tiny but successful proof of concept, the focus shifted to planning the tool’s architecture, including considerations for data structures, user authentication, and Google Search Console interaction.

How did you address the challenges such as API limits and user authentication?

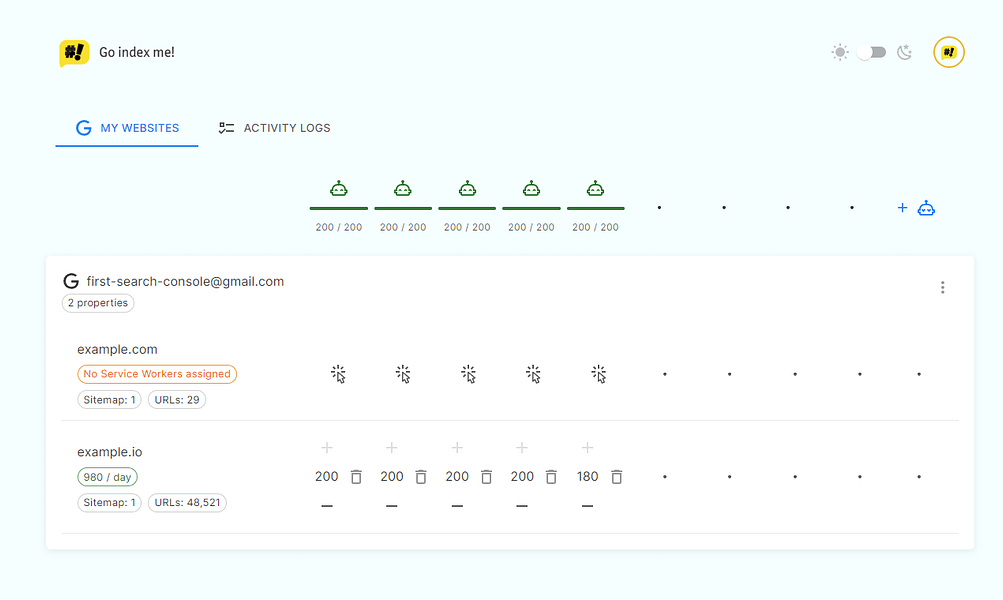

The API request limits did not stop me from pursuing the problem. Google has a limit of 200 URL indexing requests per Google Cloud Project per day, but no limit per website per day. So, in theory, as long as you have enough Google Cloud Projects, you could send as many indexing requests per website per day as you wish.
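To make the quota arithmetic concrete, here is a minimal sketch of spreading a batch of URLs across several Google Cloud Projects without exceeding 200 requests per project per day. It is not Go Index Me's actual code (which is written in PHP); the function name `plan_requests` and the project IDs are hypothetical.

```python
from collections import deque

# Daily quota per Google Cloud Project, as stated in the interview.
DAILY_QUOTA_PER_PROJECT = 200


def plan_requests(urls, project_ids, quota=DAILY_QUOTA_PER_PROJECT):
    """Assign URLs to projects, filling each project up to its daily quota.

    Returns (assignments, leftover): a dict mapping project ID to its URLs,
    and the list of URLs that did not fit into today's combined quota.
    """
    assignments = {pid: [] for pid in project_ids}
    pending = deque(urls)
    for pid in project_ids:
        while pending and len(assignments[pid]) < quota:
            assignments[pid].append(pending.popleft())
    return assignments, list(pending)


if __name__ == "__main__":
    urls = [f"https://example.com/page-{i}" for i in range(450)]
    plan, leftover = plan_requests(urls, ["project-a", "project-b"])
    print({pid: len(batch) for pid, batch in plan.items()}, len(leftover))
```

With 450 URLs and two projects, each project receives 200 URLs and the remaining 50 wait for the next day or for a third project.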

Extensive research was done on handling edge cases, user authentication security, sitemaps, and understanding the limitations of Google’s API requests. Sitemaps were particularly tricky because, it turns out, most website owners create really poor sitemaps with duplicate URLs, malformed data, absurd rate limiting, and so on.
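As an illustration of the sitemap problems described above, the sketch below extracts `<loc>` entries from a sitemap, drops malformed ones, and removes duplicates while preserving order. It assumes a standard `urlset` sitemap using the sitemaps.org namespace; the function name `clean_sitemap_urls` is hypothetical and this is not necessarily how Go Index Me handles it.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlsplit

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"


def clean_sitemap_urls(xml_text):
    """Return unique, well-formed http(s) URLs from a sitemap, in order."""
    try:
        root = ET.fromstring(xml_text)
    except ET.ParseError:
        return []  # the whole document is malformed XML

    seen = set()
    cleaned = []
    for loc in root.iter(SITEMAP_NS + "loc"):
        url = (loc.text or "").strip()
        try:
            parts = urlsplit(url)
        except ValueError:
            continue  # e.g. a broken IPv6 literal
        if parts.scheme not in ("http", "https") or not parts.netloc:
            continue  # empty, relative, or otherwise malformed entry
        if url in seen:
            continue  # duplicate entry
        seen.add(url)
        cleaned.append(url)
    return cleaned
```

A real crawler would also need to follow sitemap index files and respect the server's rate limits, which this sketch leaves out.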

The API updates are handled by a CI/CD pipeline in GitLab. The production repository sends a webhook request to update the API server, which sets a flag to begin the update procedure with the next Cron task. The app uses unique time slots to avoid race conditions and optimize resource usage across all users.
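The two mechanisms in this paragraph can be sketched roughly as follows: a webhook handler that only sets a flag, a Cron task that consumes the flag on its next run, and an allocator that gives each user a distinct time slot. Everything here is an assumption made for illustration (a file-based flag, one slot per minute, and all function names); the interview does not describe the real implementation.

```python
from pathlib import Path

# Assumption: one slot per minute of the day.
SLOTS_PER_DAY = 24 * 60


def request_update(flag: Path) -> None:
    """Called by the webhook: record that an update is pending."""
    flag.touch()


def consume_update_flag(flag: Path) -> bool:
    """Called by the next Cron task: return True once per pending update."""
    try:
        flag.unlink()
        return True
    except FileNotFoundError:
        return False


def assign_slot(taken: set[int], slots_per_day: int = SLOTS_PER_DAY) -> int:
    """Reserve the lowest free slot so that no two users share one."""
    for slot in range(slots_per_day):
        if slot not in taken:
            taken.add(slot)
            return slot
    raise RuntimeError("all time slots are taken")


def slot_to_time(slot: int) -> str:
    """Convert a minute-of-day slot to HH:MM."""
    return f"{slot // 60:02d}:{slot % 60:02d}"
```

Because each user's tasks run only in that user's slot, two tasks never operate on the same account at once, and the load is spread across the day.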

What methods does goindex.me use to “politely ask” Google to index URLs?

Go Index Me uses the official Google Indexing API to send indexing requests to Google.
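For readers unfamiliar with the Indexing API, a request is a JSON POST to the `urlNotifications:publish` endpoint with the URL and a notification type. The sketch below only builds such a request and does not send it. It is in Python rather than the PHP the tool is written in, the function name is hypothetical, and obtaining the OAuth 2.0 access token (from a service account authorized for the Search Console property) is assumed to happen elsewhere.

```python
import json
import urllib.request

ENDPOINT = "https://indexing.googleapis.com/v3/urlNotifications:publish"
ALLOWED_TYPES = ("URL_UPDATED", "URL_DELETED")


def build_publish_request(url, access_token, notification_type="URL_UPDATED"):
    """Build (but do not send) an Indexing API publish request."""
    if notification_type not in ALLOWED_TYPES:
        raise ValueError(f"unsupported notification type: {notification_type}")
    body = json.dumps({"url": url, "type": notification_type}).encode("utf-8")
    return urllib.request.Request(
        ENDPOINT,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            # access_token is a placeholder for a real OAuth 2.0 token.
            "Authorization": f"Bearer {access_token}",
        },
    )
```

Passing the returned object to `urllib.request.urlopen` would send it; each such call counts against the project's daily quota.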

I mention that this is just a “polite reminder” because Google reserves the right to simply ignore these indexing requests and/or deindex your pages at any time without any notice and without any justification.

That being said, your best bet long-term is to create high-quality content that other humans enjoy reading. In other words, don’t write for the bots. Gather quality backlinks as well and you should be fine.

How does Go Index Me ensure that websites stay indexed over time?

Google will continuously deindex pages that it deems unworthy of indexing. Write quality content and gather quality backlinks and you should be fine. But every once in a while, it doesn’t hurt to let Google know you’ve made improvements to your content and you believe it deserves re-indexing.

Go Index Me sends just-in-time indexing requests for pages that were deindexed, so you don’t need to worry about any manual actions.
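One simple way to picture this is as a comparison between two snapshots of a site's indexing state. The sketch below is an assumption about the general idea, not the tool's actual logic, and `find_deindexed` is a hypothetical name.

```python
def find_deindexed(previously_indexed, currently_indexed):
    """Return URLs that were indexed before but are missing now, in order."""
    current = set(currently_indexed)
    return [url for url in previously_indexed if url not in current]


if __name__ == "__main__":
    before = ["https://example.com/a", "https://example.com/b"]
    after = ["https://example.com/b"]
    # The URLs returned here would be queued for a new indexing request.
    print(find_deindexed(before, after))
```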

How does goindex.me stay updated with changes in Google’s indexing algorithms?

Go Index Me uses two different techniques to discover the indexing state of URLs, both based on official Google APIs. These checks are scheduled to run every day at predefined time slots. You can view your daily tasks by visiting this page.

Can you share any success stories or case studies from users of goindex.me?

My own website of 50,000 pages got fully indexed about one month after I registered it with Go Index Me. I’ve shared these stats on Twitter.

Everyone is free to view and share their results by visiting the Perspective tab.

What’s the tech stack for Go Index Me and why did you choose these technologies?

The app is a monolith. The API and Cron tasks were written entirely in PHP with a MongoDB document store. API updates are handled through a CI/CD pipeline in GitLab. I chose a monolithic architecture because I loathe premature optimization and unjustified complexity.

I went for PHP because I needed concurrency, and a standard PHP setup handles each request in its own worker process out of the box. Also, I didn’t want to deal with worker threads in Node.js. The entire app runs on a VPS behind a Cloudflare proxy for DDoS protection.

The web application is an SSG, a statically generated site. It’s a Next.js application hosted on Cloudflare Pages, hydrated with API calls. The entire front-end is cached locally by Progressive Web Application workers, which means near-instant response times on reloads. The app also has offline support.

SSGs are awesome! You get pure separation of concerns out of the box, and you never need to worry about traffic spikes, as Cloudflare (or any other static web hosting service) will handle that for you from a global Content Delivery Network. With an SSG, you never need to think about egress costs associated with your user interface.

What advice do you have for developers on best practices and overcoming challenges?

Don’t be afraid to establish your unique rules and disregard conventional best practices when they seem illogical.

Rapid iteration is key, especially when developing visualization tools and logs, as they can greatly aid in understanding your code’s performance.

While creating custom tests, also maximize the use of existing frameworks and tools in your development process.

Prioritize solving your own problems first. Being your own ICP (Ideal Customer Profile) gives you the unfair advantage of an instant feedback loop when iterating on the product.

Did you enjoy this interview on Go Index Me – Google Indexing API? I regularly interview creators who have recently launched their products. Check out additional discussions like this on BetaHunt.io. For updates, follow me on X.
